In a previous blog post I used sliders to generate fluctuating repetitions. The sliders make it easy to understand what the system is doing, but they aren't a very natural way to search the parameter space. The UI was good for explaining but less so for exploring. In this post I'll demonstrate how I compressed these six one-dimensional sliders into a single two-dimensional slider using a small autoencoder. The experience is built for PC, so mobile users might want to switch devices. The post may also make a lot more sense if you've seen the previous blog post.

Idea

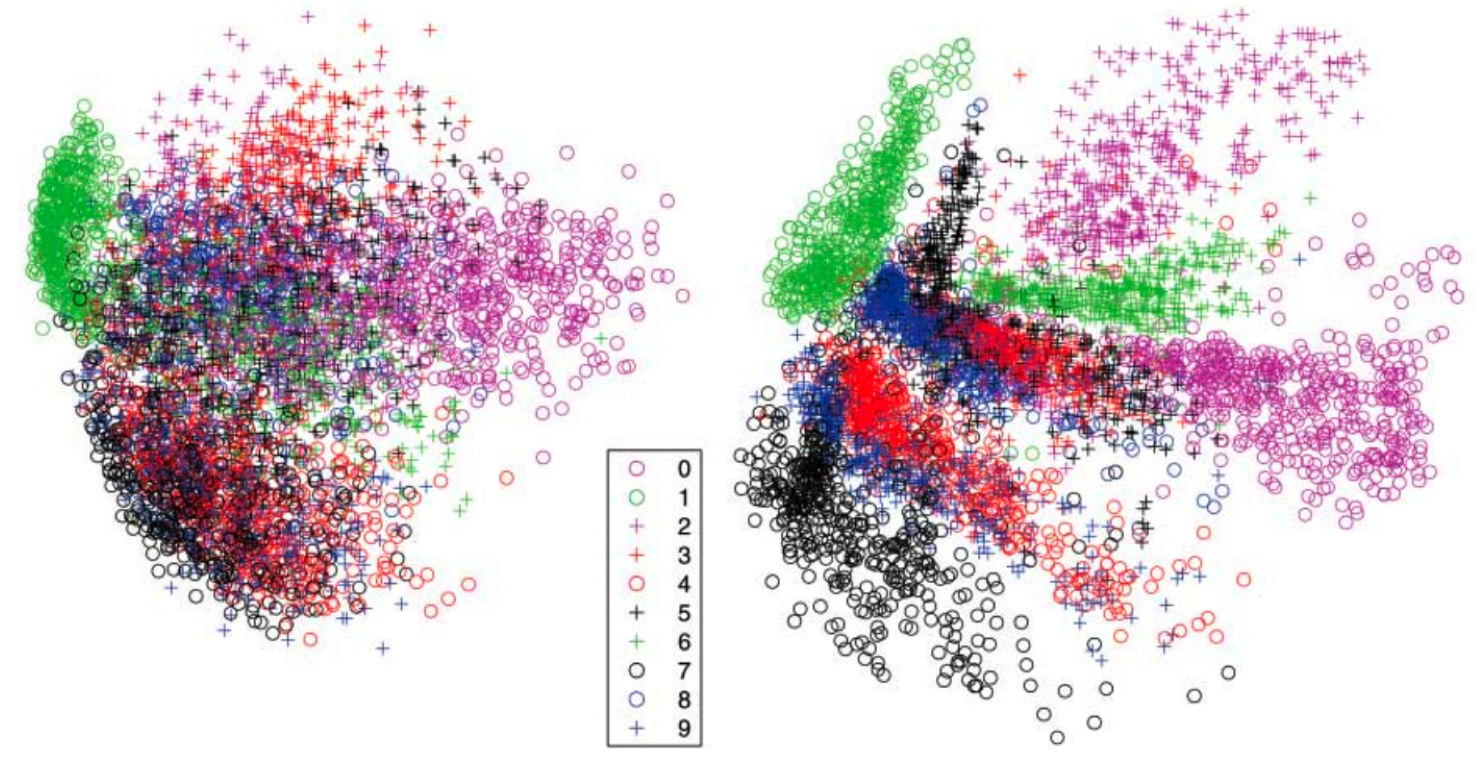

The idea is to use a neural network to map a high-dimensional space to a much lower, two-dimensional space. Most data scientists will have seen the cool autoencoder demo on the MNIST dataset, in particular this image:

The MNIST dataset is very structured, which is why you can see the digits clustered together. The idea here is to do something very similar, but with slider data as input, which for all intents and purposes can be regarded as homogeneous input without any pattern. The question then is: how well can a simple neural network compress this data?

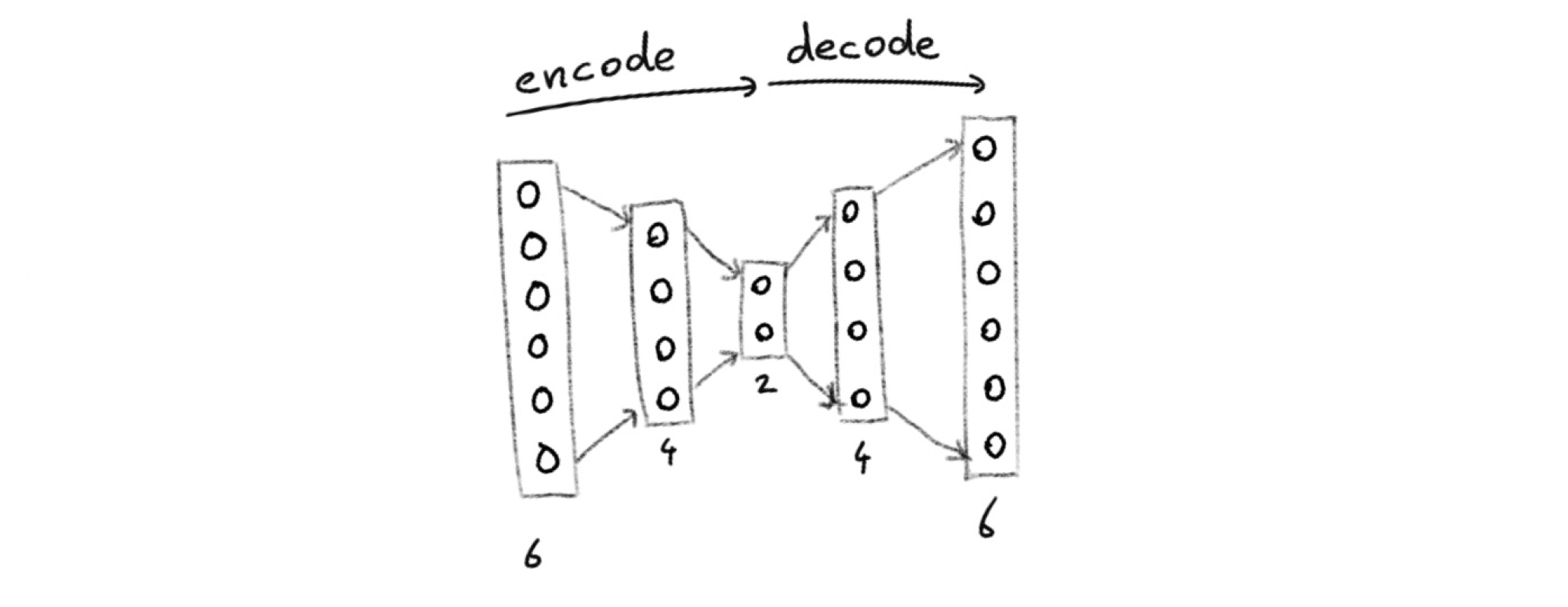

To keep things simple I'll use sigmoid activation functions and a simple feed-forward network architecture. I'll try to map the six dimensions of the slider input down to two latent dimensions and then back up to six.

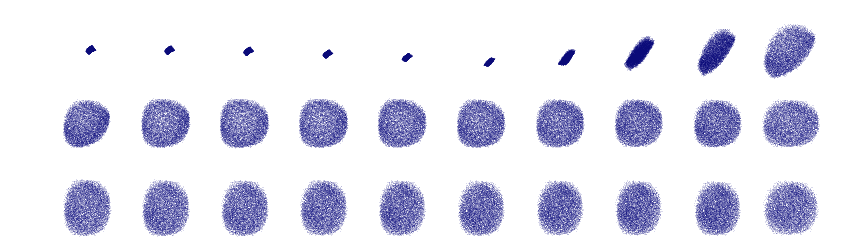
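To make the architecture concrete, here is a minimal sketch of the forward pass, assuming the simplest possible layout: the six slider values feed straight into a two-unit sigmoid bottleneck, which feeds straight into a six-unit sigmoid output. The notebook may well use extra hidden layers; the layer sizes and the random placeholder weights (`random_layer`, `encode`, `decode` are illustrative names) are assumptions, not trained values.

```python
import math
import random

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def dense(x, weights, biases):
    """Fully connected layer with sigmoid activation: one row of weights per output unit."""
    return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
            for row, b in zip(weights, biases)]

def random_layer(n_in, n_out, rng):
    """Placeholder weights only; a trained model would load these instead."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

rng = random.Random(0)
enc_w, enc_b = random_layer(6, 2, rng)  # 6 slider values -> 2 latent coordinates
dec_w, dec_b = random_layer(2, 6, rng)  # 2 latent coordinates -> 6 slider values

def encode(sliders):
    return dense(sliders, enc_w, enc_b)

def decode(latent):
    return dense(latent, dec_w, dec_b)

sliders = [0.1, 0.5, 0.9, 0.3, 0.7, 0.2]
latent = encode(sliders)         # a point inside the unit square
reconstruction = decode(latent)  # six values between 0 and 1
```

One convenient side effect of a sigmoid bottleneck is that every latent point lands inside the unit square, which maps directly onto a square two-dimensional slider.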

I've made an IPython notebook, viewable here, that implements this. The evolution of the latent feature space is shown below.

It's easy to imagine that some information gets lost here, but the network seems to spread the information around the latent feature space well enough.
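To get a feel for how much information survives the bottleneck, here is a minimal pure-Python sketch of the training loop. It is not the notebook's TensorFlow code: it assumes a 6-2-6 sigmoid network, uniformly random slider settings as training data, a squared-error reconstruction loss and plain stochastic gradient descent. The learning rate, epoch count and dataset size are arbitrary choices for illustration.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def forward(x, params):
    W1, b1, W2, b2 = params
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    y = [sigmoid(sum(w * hi for w, hi in zip(row, h)) + b) for row, b in zip(W2, b2)]
    return h, y

def train_step(x, params, lr):
    """One SGD step on L = sum((y - x)^2), updating the weight lists in place."""
    W1, b1, W2, b2 = params
    h, y = forward(x, params)
    # dL/dz at the output, using sigmoid'(z) = y * (1 - y)
    d_out = [2.0 * (yi - xi) * yi * (1.0 - yi) for yi, xi in zip(y, x)]
    # Backpropagate to the 2-unit bottleneck (computed before W2 is updated)
    d_hid = [sum(d_out[k] * W2[k][j] for k in range(len(d_out))) * h[j] * (1.0 - h[j])
             for j in range(len(h))]
    for k, dk in enumerate(d_out):
        for j, hj in enumerate(h):
            W2[k][j] -= lr * dk * hj
        b2[k] -= lr * dk
    for j, dj in enumerate(d_hid):
        for i, xi in enumerate(x):
            W1[j][i] -= lr * dj * xi
        b1[j] -= lr * dj

def mse(data, params):
    """Mean squared reconstruction error per slider value."""
    total = 0.0
    for x in data:
        _, y = forward(x, params)
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x)) / len(x)
    return total / len(data)

rng = random.Random(42)
n_in, n_latent = 6, 2
params = (
    [[rng.gauss(0, 0.5) for _ in range(n_in)] for _ in range(n_latent)], [0.0] * n_latent,
    [[rng.gauss(0, 0.5) for _ in range(n_latent)] for _ in range(n_in)], [0.0] * n_in,
)
# Uniformly random slider settings: no structure for the network to exploit
data = [[rng.random() for _ in range(n_in)] for _ in range(500)]

before = mse(data, params)
for epoch in range(20):
    for x in data:
        train_step(x, params, lr=0.5)
after = mse(data, params)
print(f"MSE before: {before:.4f}, after: {after:.4f}")
```

A useful yardstick: a network that always outputs 0.5 has an expected error of 1/12 ≈ 0.083 on uniform inputs, so the trained error is better judged against that than against zero.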

Results

I implemented this in TensorFlow and saved the resulting weights as a JSON file. The JSON object is loaded by JavaScript and used to define functions that map data from the slider input space to the latent feature space and back. I've implemented custom UI elements in d3 to make it easy to move between the two spaces. Just by hovering over the UI elements you should be able to see the effect in the corresponding space.
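The page does this step in JavaScript; to keep the examples in one language, here is the same idea sketched in Python. The JSON layout (an `encoder` and a `decoder`, each with `weights` and `biases`) is a hypothetical format rather than the actual export, the numbers are placeholders rather than trained weights, and `make_layer`, `to_latent` and `to_sliders` are illustrative names.

```python
import json
import math

# Hypothetical export format; the numbers are placeholders, not trained weights.
WEIGHTS_JSON = """
{
  "encoder": {"weights": [[0.8, -0.4, 0.3, 0.1, -0.6, 0.5],
                          [-0.2, 0.7, -0.5, 0.9, 0.2, -0.3]],
              "biases": [0.0, 0.1]},
  "decoder": {"weights": [[1.2, -0.8], [-0.5, 1.1], [0.9, 0.4],
                          [-1.0, 0.6], [0.3, -1.2], [0.7, 0.7]],
              "biases": [-0.1, 0.0, 0.2, 0.1, 0.0, -0.2]}
}
"""

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def make_layer(spec):
    """Turn a {"weights": [...], "biases": [...]} dict into a sigmoid layer function."""
    weights, biases = spec["weights"], spec["biases"]

    def layer(x):
        return [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(weights, biases)]

    return layer

model = json.loads(WEIGHTS_JSON)
to_latent = make_layer(model["encoder"])   # slider input space -> latent feature space
to_sliders = make_layer(model["decoder"])  # latent feature space -> slider input space

# Hovering over the 2D slider at latent position (0.25, 0.75) sets all six sliders at once
sliders = to_sliders([0.25, 0.75])
# Moving the six sliders places a marker in the latent square
point = to_latent([0.5] * 6)
```

Wrapping each layer in a closure means the file only has to be parsed once; after that, mapping a mouse position is just a couple of small matrix-vector products, cheap enough to run on every hover event.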

A few things seem to stand out:

- points near each other in latent feature space map to similar slider allocations

- the feature space doesn't map everything perfectly back to the slider input; values close to 0 or 1 in particular seem hard to retrieve from the latent feature space

- when playing with the sliders you'll notice that some of them seem to follow a linear relationship; if you play around enough you should also notice some non-linear mappings
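One plausible contributor to the difficulty with values near 0 and 1 (an assumption, not something checked against the notebook): if the output layer uses a sigmoid, it can only approach 0 or 1 asymptotically. The pre-activation needed to produce a target value t is the logit, ln(t / (1 - t)), which grows without bound as t approaches 1 (and symmetrically as t approaches 0):

```python
import math

def logit(t: float) -> float:
    """Inverse of the sigmoid: the pre-activation z for which sigmoid(z) == t."""
    return math.log(t / (1.0 - t))

for target in (0.5, 0.9, 0.99, 0.999):
    print(f"sigmoid(z) = {target}: needs z = {logit(target):.2f}")
```

Going from 0.9 to 0.999 roughly triples the required pre-activation (about 2.2 versus 6.9), and with only two latent coordinates feeding all six outputs, the decoder rarely has room to push several sliders that far at once.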

Feel free to play around. I've also added a few fluctuating repetition elements from the previous blog post.