Edit:

You may not want to read this blogpost on mobile. The d3 stuff can be a bit heavy.

In this document we'll do an experiment to see if humans can generate random numbers effectively. We will use you, a human to do this. Please click the heads/tails button as randomly as you can. You may also use the 1 (heads) or 0 (tails) keys on your keyboards (which probably is a whole lot faster if you are on a laptop).

You've currently generated 0 numbers, please ensure that you got about 100 before moving on.

You are likely to cheat if you scroll down, which is fine when you try this a second time but it would be best to do a clean attempt beforehand.

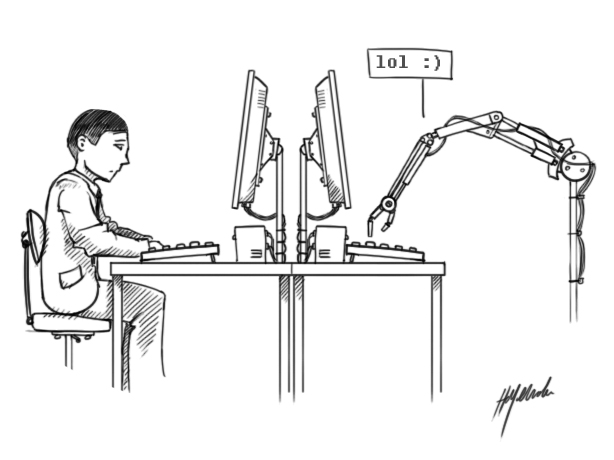

Inverse Turing Test

Let us do an inverse turing test.

You've just given your sequence of 'random' numbers. There are many axes for judging if you have given random data. In this document we will focus on the markov chain that we can learn from the generated input. The counts for these markov models are graphed below. You may see no bias in the first or second chart, but as you scroll down it may become more and more biased to a certain pattern.

Often, humans fall into a pattern instead of delivering true randomness. Especially repeating 0-1-0-1 or 1-0-1-0 is common. Using the barcharts it may become evident if this is the case.

Probability of predictions

Let us go a step further. It is well possible that you are such a terrible random number generator that an actual machine can predict your 'randomness'. We can use the counts from before to generate simple markov models $M_k$ (where $k$ the number of steps we look back). Each markov chain can then say how likely it is to see a heads (or a 1) given the last $k$ coinflips we've seen (this is denoted by $x_{-k}$).

$$ P_{M_k}(H | x_{-k}) $$

We can combine these models. We train $M_1,...,M_5$ in real time, because it is just counting on a stream, and combine these via a naive ensemble rule.

$$ P(H|x) \approx \prod_{i=1}^5 \frac{P_{M_k}(H | x_{-k})}{P_{M_k}(H | x_{-k}) + P_{M_k}(\neg H | x_{-k})} $$

If the math confuses you; we just average out what each independant markov chain thinks. This is a blunt model, especially because we're sticking to discrete-land while a beta distribution would be much better here. Still, this should be able to pick up a lot of common human patterns. We'll also introduce some smoothing in the beginning to prevent a very early overfit. We encourge the reader to try and changing their pattern a few times to see how the markov chains respond.

When we do this the predictions over time are show below. The lines $p_1, ..., p_5$ correspond to the predictions of markov chain $M_1,...,M_5$ and pred corresponds to the prediction from $P(H|x)$.

The accuracy of this naive ensemble is depicted in the plot below. We show the total accuracy as well as the accuracy of the last 15 predictions. We do this to also demonstrate how the markovs can learn new patterns.

Conclusion

So with these numbers, how random might the supplied data be? Well, if the data truly was random then the number of correct predictions needs to come from the following distribution;

$$ P(a | H_0) \sim Bin(\frac{1}{2}, n) \sim {n \choose k} p^k (1-p)^{n-k} $$

This means that our found accuracy can help us determine how likely it is that the data is generated randomly. In maths, with the given data; $\sum_i P(H_0 | x_i \geq a) = $ 0.5. This is by no means the only axis where we can measure randomness, but it is able to filter out a lot of human behavior.

Mainly, coding this was a lot of fun.

Bonus: What would a robot do?

You may be wondering what the result is if an actual robot filled this in. Press the button below to find out.

Shoutouts

This document was created together with @jbnicolai_, props to him!