I heard a cute anecdote that reminded me of the importance of solving the right problem. I've rephrased the actual story to make a more general point.

Imagine

Imagine that you’re a ranger and that it is your job to protect elephants from poaching. A large part of protecting the elephant herds is understanding their movement patterns within the national park. Elephants are beautiful creatures, but they don’t like having sensors put on them. Instead, it turned out to be possible to install a large array of microphones across the park. The idea has many benefits: by recording over time you could detect the sounds of many animals, including elephants, and because the microphones are stationary you could even power them with solar panels.

There’s only one problem: hundreds of microphones produce a lot of audio, far too much to listen to. Thankfully, there is a PhD student who is willing to write a thesis on detecting elephants in audio. The PhD student goes off, imports the libraries, lets the tensors flow and after a week comes back with a highly accurate model: 100% accurate, cross-validated and bound to be a huge time-saver.

This result amazes the national park’s investors and quickly becomes the year’s main budget priority. Every ranger is given an app that tells them where to go to find elephants.

Then the field tests take place and it suddenly turns out that … the model isn’t actually 100% accurate in real life. About 20% of the time there are no elephants where the algorithm says there should be. The rangers only ever receive the predictions, never the audio clips, so they cannot verify what happened. All they observe is that the elephants aren’t there.

What happened?

If you work in data science, an alarm bell should be ringing. It seems plausible that the training data came from a zoo, and that those recordings do not represent elephants in the wild. This is a fair point and it certainly deserves attention.

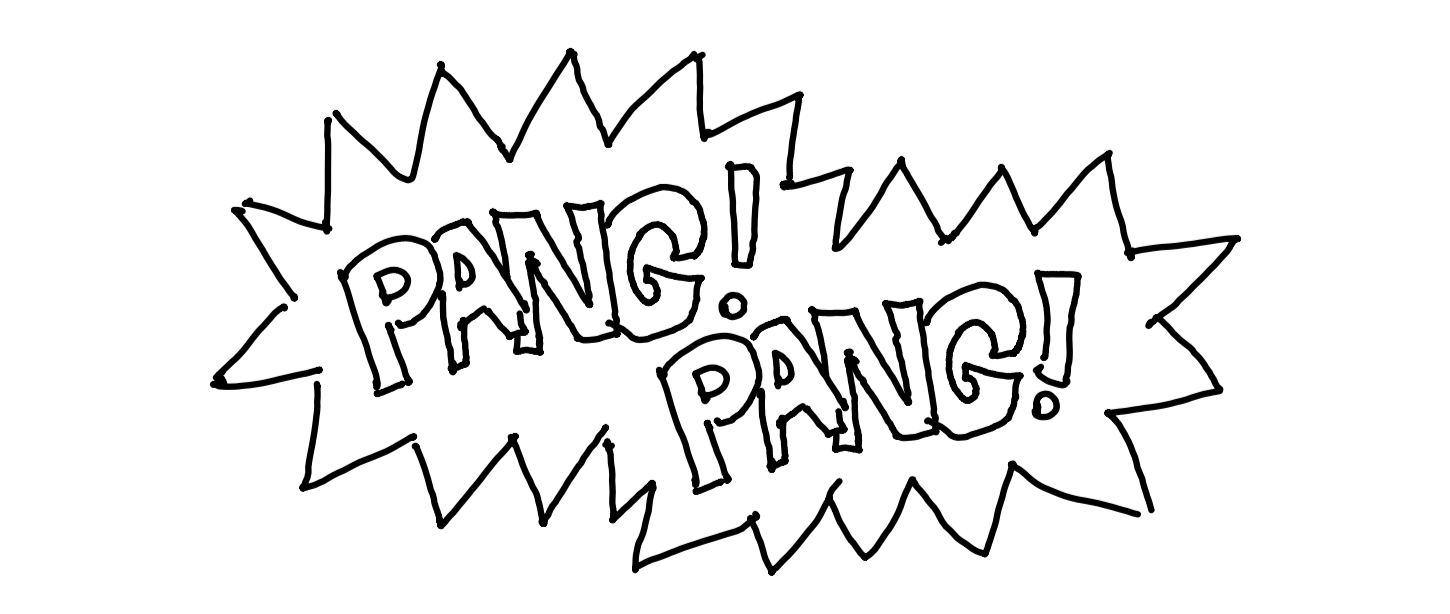

But maybe the real problem is that you shouldn’t be listening for elephants in the first place. You should be listening for gunshots. Not only are they easier to detect, they are also the more pressing event to react to.

Slight Danger

In this example, it would have helped if the rangers hadn’t just received the app but had also been active participants in labelling the data. Rangers might have recognized that a more important label was missing from the algorithm.

But perhaps the more human part of the problem was that the high initial accuracy distracted the investors from more effective things that could have been done. It’s tempting to think “we can predict this accurately, so let’s focus on finding an application!”, but this should never stop you from considering the lower-hanging fruit first.

You don’t want to be the person pointing at elephants that aren’t in the room.