This document contains an exercise in combinatoric search as well as a bit of probability theory. It was inspired by an episode of Sherlock Holmes.

The problem

You're helping out Sherlock Holmes. He has intercepted messages from his arch nemesis and he needs them decyphered. You know that the bad guys have a substitutional cypher. Each letter in the alphabet is mapped to another letter but you do not know the mapping. How would you go about translating them?

Try to translate the following messages. They each have a different cypher.

Secret Message 1

rb vzlk lkw vbcmn vqlokzhp vkql kqr lkw ozlj bs nbhw lb ucwuqcw sbc eawcr ehucwownwhlwn lwrl lkw qhrvwc zr hbl feok lkwcw kqiw awwh hb ueamzo rwcizow qhhbehowfwhlr bc nwfbhrlcqlzbhr bs lkw lwokhbmbpj wtowul sbc lkw fqjbc qhn bhw ubmzow bsszozqm hb blkwc lbu ozlj mwqnwc kqr rwwh q rwmsnczizhp eawc iwkzomw buwcqlw eu ombrw szcw qhn wfwcpwhoj rwcizowr nbhl yhbv vkwcw lkw eawc oqcr vzmm lcqiwm zl zr ucwozrwmj lkzr kqhnrbss quucbqok lkql kqr fqnw uzllraecpk znwqm pcbehnr sbc bhw bs rzmzobh iqmmwjr abmnwrl wtuwczfwhlr qhn zl kqr zphzlwn oczlzozrf lkql lkw ozlj zr pzizhp qvqj zlr ywjr lb eawc vkzok zr lwrlzhp q hqrowhl lwokhbmbpj qhn kqr q cwuelqlzbh sbc cehhzhp cbepkrkbn biwc cwpemqlbcr qhn fehzozuqmzlzwr zlr hbl bec cbmw lb lkcbv eu cwpemqlzbhr bc mzfzl obfuqhzwr mzyw eawc rqzn azmm uwnelb uzllraecpkr fqjbc vkb rqzn lkql eawc umqhhwn lb erw qabel fbnzszwn ibmib rubcl elzmzlj iwkzomwr sbc lkw uqrrwhpwc lczqmr lkw iwkzomwr vzmm qmrb kqiw q kefqh fbhzlbc awkzhn lkw vkwwm jbe oqh wzlkwc uel eu cwn lquw bc cbmm bel lkw cwn oqcuwl zs jbe vqhl lb aw q rlowhlecj mqabcqlbcj sbc lwokhbmbpj jbe uel bel lkw oqcuwl lkw fqjbcr fqhlcq kzpkmzpklr vkql zl lqywr lkwrw nqjr qr ozlzwr rwwy lb rkwn lkwzc cerl awml uqrlr qhn lcqhrsbcf lkwfrwmiwr zhlb lwokhbmbpj kear wrrwhlzqmmj pziw lkw lwok obfuqhzwr mblr bs scww cwzh lkw quucbqok nwroczawn qr pcwwhmzpkl pbiwchzhp zr bhw lkql uzllraecpk qhn lkw rlqlw bs uwhhrjmiqhzq kqiw heclecwn biwc lkw mqrl swv jwqcr vkwh eawc vqhlwn lb wtuqhn zlr cwrwqcok qcbehn qelbhbfber iwkzomwr uzllraecpk kwmuwn lkw obfuqhj mwqrw q mqcpw umbl hwqc lkw ozljr cziwcscbhl sbc q lwrlzhp lcqoy vkwh rlqlw cwpemqlbcr lczwn lb aqh cznwrkqczhp rwcizowr zh uzllraecpkr fqjbc qhn lkw rlqlwr pbiwchbc kwmuwn aql lkbrw kecnmwr nbvh blkwcvzrw uzllraecpkr ubmzlzozqhr rlqj bel bs lkw vqj

Secret Message 2

lkzr zr q rwhlwhow lkql jbe obemn wqrzmzj cwqn zs jbe yhbv kbv lb lcqhrmqlw zl awobfwr feok wqrzwc zs jbe kqiw fbcw lwtl

Secret Message 3

awqelzsem zr awllwc lkqh epmj wtumzozl zr awllwc lkqh zfumzozl rzfumw zr awllwc lkqh obfumwt obfumwt zr awllwc lkqh obfumzoqlwn smql zr awllwc lkqh hwrlwn ruqcrw zr awllwc lkqh nwhrw cwqnqazmzlj obehlr ruwozqm oqrwr qcwhl ruwozqm whbepk lb acwqy lkw cemwr qmlkbepk ucqolzoqmzlj awqlr ueczlj wccbcr rkbemn hwiwc uqrr rzmwhlmj ehmwrr wtumzozlmj rzmwhown zh lkw sqow bs qfazpezlj cwserw lkw lwfulqlzbh lb pewrr lkwcw rkbemn aw bhw qhn ucwswcqamj bhmj bhw baizber vqj lb nb zl qmlkbepk lkql vqj fqj hbl aw baizber ql szcrl ehmwrr jbecw nelok hbv zr awllwc lkqh hwiwc qmlkbepk hwiwc zr bslwh awllwc lkqh czpkl hbv zs lkw zfumwfwhlqlzbh zr kqcn lb wtumqzh zlr q aqn znwq zs lkw zfumwfwhlqlzbh zr wqrj lb wtumqzh zl fqj aw q pbbn znwq hqfwruqowr qcw bhw kbhyzhp pcwql znwq mwlr nb fbcw bs lkbrw

If you scroll down I'll demonstrate my version of a solution. If you've never solved something like this before I urge you to try it. It's an amazing toy problem to get to learn the base types of a programming language.

The Inference Part

Let's first make a mapping and load in some libraries.

import pandas as pd

import numpy as np

from cytoolz import sliding_window

import itertools as it

from collections import namedtuple, defaultdict

import matplotlib.pyplot as plt

%matplotlib inline

char_range = range(ord('a'), ord('z') + 1)

random_map = np.random.permutation(range(ord('a'), ord('z') + 1))

translator = defaultdict(lambda: '', {chr(char_range[i]):chr(random_map[i]) for i,j in enumerate(char_range)})

translator[' '] = ' '

sent = 'hello world you can read me'

mapped_sent = ''.join([translator[c] for c in sent])

Depending on your random numbers you may get something else, but I got this:

kwmmb vbcmn jbe oqh cwqn fw

To translate this, we could do a few things. We could look at the most frequent letter that occurs in the crypted message and infer that that should be mapped to the most frequent letter in the english language. We can repeat this a few times perhaps but depending on the text this might be rather hard.

You could also look at it a bit more probibalistically.

You may have an hypothesis, for example: a $\to$ b (this reads: 'a' maps to 'b'). You'll want to quantify how likely it is that a mapping is correct such that you can compare two mappings.

As luck would have it, this probability is known to us given that the characters we see are from the english language. It is much more likely to view the letter 'a' than it is to view the letter 'q'. If the mapping that we are considering gives us a translated text with many q's then we know that the translation is probably not correct.

We can do this for single letters but we can also do this for bigrams. Here too we can argue that it is much more common than zq. Via the same line of thought we can also use bigrams to determine which translation is best.

In the code below I'll be downloading the letter frequencies for the english language. You can easily find these by googling.

df_mono = pd.read_csv("http://koaning.io/theme/data/monograms.txt", sep = " ", names = ['char', 'n'])

df_mono['p'] = df_mono['n']/df_mono['n'].sum()

mono_probs = {i[1].char.lower(): i[1].p for i in df_mono.iterrows()}

df_bigrams = pd.read_csv("http://koaning.io/theme/data/bigrams.txt", sep = " ", names = ['char', 'n'])

df_bigrams['p'] = df_bigrams['n']/df_bigrams['n'].sum()

bigram_probs = {i[1].char.lower() : i[1].p for i in df_bigrams.iterrows() if i[1].char is not np.nan}

bigram_probs = defaultdict(lambda : 0.000001, bigram_probs)

ngram_probs = {

1: mono_probs, 2: bigram_probs

}

As an example, this is what mono_probs looks like:

{'a': 0.08551690673195275,

'b': 0.016047959168228293,

'c': 0.03164435380900101,

'd': 0.03871183735737418,

'e': 0.1209652247516903,

'f': 0.021815103969122528,

'g': 0.020863354250923158,

'h': 0.04955707280570641,

'i': 0.0732511860723129,

'j': 0.002197788956104563,

'k': 0.008086975227142329,

'l': 0.04206464329306453,

'm': 0.025263217360184446,

'n': 0.07172184876283856,

'o': 0.07467265410810447,

'p': 0.020661660788966266,

'q': 0.0010402453014323196,

'r': 0.0633271013284023,

's': 0.06728203117491646,

't': 0.08938126949659495,

'u': 0.026815809362304373,

'v': 0.01059346274662571,

'w': 0.018253618950416498,

'x': 0.0019135048594134572,

'y': 0.017213606152473405,

'z': 0.001137563214703838}

We'll now define some functions that help use map these probabilities to translated texts such that we can say something about how likely it is that the mapping is correct.

Note in the code below I'll be using the np.sum(np.log(.))- trick. This is because I'm interested in comparing the maximum allocation of multiplied probabilities and this is proportional to the sum of it's logarithms.

$$ \arg\max \prod_i x_i \propto \sum_i \log(x_i) $$

Don't worry if you need a reminder in maths, but do look it up. It is a trick that is used often.

def gen_random_mapping():

rand_perm = np.random.permutation(range(ord('a'), ord('z') + 1))

newmap = defaultdict(lambda: '', {chr(char_range[i]):chr(rand_perm[i]) for i,j in enumerate(char_range)})

newmap[' '] = ' '

return newmap

def flatten(listOfLists):

return ["".join(_) for _ in it.chain.from_iterable(listOfLists)]

def likelihood(sentence, mapping, n_gram = 2):

mapped_sentence = "".join([mapping[_] for _ in sentence if _ != ''])

tokens = flatten([sliding_window(n_gram, _) for _ in mapped_sentence.split(" ") if len(_) >= n_gram])

return np.sum(np.log([ngram_probs[n_gram][_] for _ in tokens]))

def inverse(mapping):

return {value:key for key, value in mapping.iteritems()}

Just to check if these functions work, let's check some likelihoods.

> mapping2 = gen_random_mapping()

> mapping3 = gen_random_mapping()

> (likelihood(mapped_sent, inverse(translator), n_gram=1),

likelihood(sent, mapping2, n_gram=1),

likelihood(sent, mapping3, n_gram=1))

(-65.021911751158058, -89.545946415367993, -84.170097431125299)

> (likelihood(mapped_sent, inverse(translator), n_gram=2),

likelihood(sent, mapping2, n_gram=2),

likelihood(sent, mapping3, n_gram=2))

(-83.555793714378353, -143.68381695617177, -135.69826990674542)

The likelihoods make sense. We can confirm that the true translation has the highest log likelihood value so we have a means to compare mappings. We know what to optimise over, we will now concern ourselves with the search space.

The Combinatorial Part

The inference problem has now turned into a search problem. The easy thing would be to just pick the most likely allocation based on the likelihood by trying out all combinations but the search space is in the order of $26!$.

Can we do this on a single machine or do we need to resort to a heuristic?

> np.math.factorial(26)

403291461126605635584000000L

Looks like we need to be a bit more clever. Let's first try a greedy search, that's easy to implement. We can update a mapping by switching any of the letters in the mapping. We can repeat this with a bit of brute force but this should significantly reduce the search space.

def switch(char1, char2, mapping):

mapping[char1], mapping[char2] = mapping[char2], mapping[char1]

data = []

curr_likelihood = -99999

letters = 'abcdefghijklmnopqrstuvwxyz'

Log = namedtuple('Log', 'mapping, score')

search_mapping = gen_random_mapping()

# dirty triple for-loop but works

for i in range(10):

for char1 in letters:

for char2 in letters:

switch(char1, char2, search_mapping)

if likelihood(sent, search_mapping) <= curr_likelihood:

switch(char2, char1, search_mapping)

curr_likelihood = max(curr_likelihood, likelihood(sent, search_mapping))

data.append(Log(search_mapping, likelihood(sent, search_mapping)))

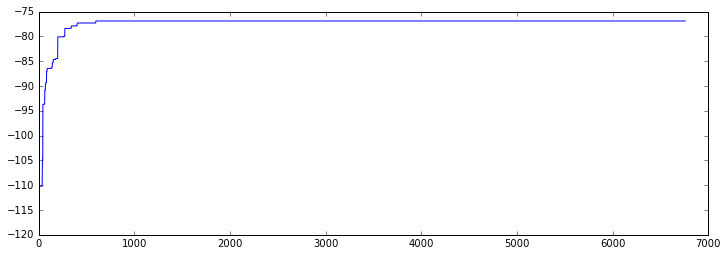

We can check the learning rate to confirm that the greedy search has converged.

plt.plot([_.score for _ in data])

Interested in reading the text that comes out?

>''.join([best.mapping[c] for c in sent])

'hello world you can read me'

Et Voila! A bit of probability theory and greedy search saves the day. I was originally wondering if I needed to do something more clever than a greedy search but after having tried it on a bunch of texts it seems like it works and doesn't need further improving.

You can confirm that this script will also translate the three texts supplied in the beginning.

If only Sherlock had Python...