Back when I lived in Amsterdam, my girlfriend and I would often play card games while getting a coffee on a sunday. It is a good excuse to explore local coffeehouses as well as a nice habbit to get away from the laptop. Our favorite card game turned out to be sushigo and at some point we got rather competitive. This got me thinking; can I apply some quick search strategies to come up with a simple hack that helps me determine what cards to play in sushigo?

How the Cardgame Works

There are some details that I'll be skipping but the gist of the game is as follows.

- To start the game you need to take 10 random cards for each player. The game is played in rounds.

- Every round every player takes a card from their hand and puts it on the table, face down.

- Next you and your opponent switch hands and you flip the card you've just played. You can now see the hand that your opponent had last round and all the cards that you've put on the table in previous rounds.

- You keep on playing until no cards are left.

- Depending on the cards you and your opponent has the scores get determined.

There are two main components of the game that make it fun:

- There is hidden information in the game but you may be able to infer the strategy of your opponent depending on the cards that are picked as the round progresses.

- Certain cards immediately give you points while other cards take a while. If you have 3 sashimi cards then you'll get 10 points, but you'll get 0 points for less. This means that there are high risk/high reward strategies possible.

Every round consists of balancing these two phenomenon.

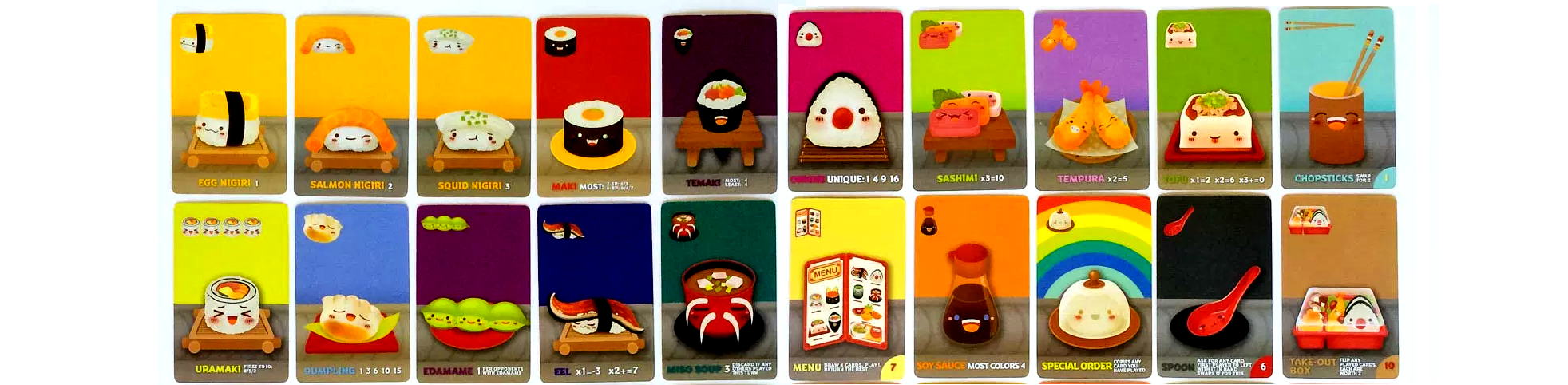

Card Details

I'll zoom in a few cards such that the mechanic of points is clear, full details can be found in the official rulebook. By explaining how some of the cards below work, I hope that it is clear how certain strategies may emerge when you're trying to win the game.

Maki Cards

If you have the most maki points at the end of a round you get +6 points. If you're the second highest then you get +3. If you have no maki cards a you only get +0. If there's a lot of maki cards to go around, you'll probably not care too much but if there's only a few of them then you may want to make sure you've got at least some.

Eel Cards

If you have only 1 eel you lose 3 points, if you have two you get +2 and if you have 3 or more you get +7. It would be great if there's only one eel in the round that it lands at your opponents side.

Shashimi Cards

For every set of three you get 10 points. Any incomplete set is worth 0 points. If you see that your opponent has two shashimi's then you'll want to prevent that she/he gets the final one.

Nigiri Cards

Nigiri cards immediately give you points. An egg nigiri gives you one point, a salmon nigiri gives you two and a squid nigiri gives you three. If you have wasabi on the table before you play a nigiri card the score of that nigiri card may be multiplied by 3. That means that if you play your cards right that you get can 9 points (wasabi + squid nigiri) by playing just two cards. Alternatively, if you see your opponent putting down a wasabi card then you'll want to ensure that no squid is returned to their hand.

Towards a Computer Science Problem

This game can get quite deep, but let's be clear: I'm not interested in anything fancy or complex like building a deep reinforcement learning algorithm to play this game. Two reasons.

- I want to build something quick as I'm doing this on a weekend.

- I want to get better at the card game myself without the aid of a laptop. My girlfriend would justifiedly consider it cheating if I needed to consult the terminal at ever decision I need to make.

With this in mind I'd prefer to just answer a simple question: what cards are the most valuable? Ie. can I order the cards in terms of value? To make things even more simple, let's not consider the rock-paper-scissors aspect of game strategies and lets just pretend that we're playing an opponent that plays random cards. I'll also assume that the game only has two players (as my girlfriend is my main opponent here).

Search Space

Unfortunately it seems like I cannot apply full enumeration as the number of permutations of cards that exist is big.

Suppose that I'll only take these 14 cards into account:

cards = ["maki-1", "maki-2", "maki-3", "sashimi",

"egg", "salmon", "squid", "wasabi", "pudding",

"tempura", "dumpling", "tofu", "eel", "temaki"]

Taking into account all the possible orders of these cards would yield something like:

$$ 14! = 87,178,291,200 \text{ combinations}$$

Obvious Improvement

The performance of any search algorithm can be improved by applying some basic rules of the card game.

A maki card with three maki's on it is obviously better than a maki card with two. A similar rule can be applied for nigiri cards (egg < salmon < squid). If you take this into account then suddenly the search space goes down immensely;

$$ \frac{14!}{3!3!} = 2,421,619,200$$

The search space is still huge, but much much smaller.

Evaluation Time vs. Serverless Sampling

It's not just the search space that is very big but the evaluation time is very large as well. Suppose we want to evaluate a certain ordering of cards. We could take the ordering and simulate many games against a player with a random ordering. Preferably we'd simulate a lot (a lot!) of times in order to remove noise in our performance measure. This means that not only the search space is very big but the evaluation time is time-consuming as well.

This looks like a CPU bound problem. If possible, I'd get 1000s CPU's that I can pummel in order to run this simulation. It feels annoying to provision docker containers for this so I was wondering what backend might be appropriate.

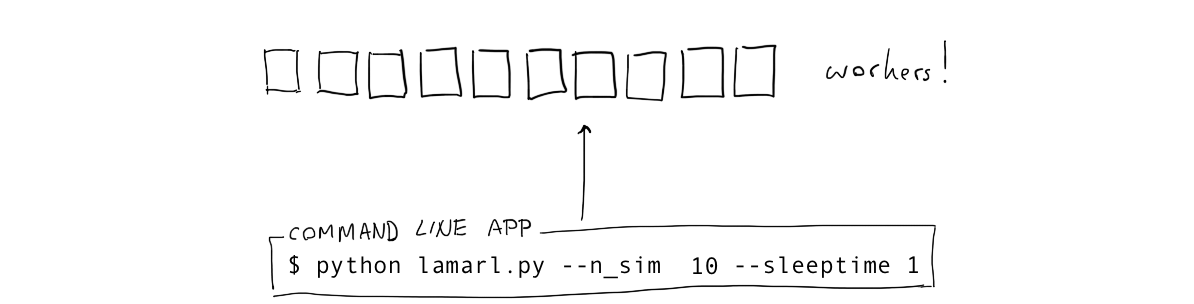

And then it dawned on me that AWS Lambda might actually be feasible for this. A single simulation might cost a minute of time but if this can run asynchronously then I can make a command line app such that it should feel as if I am evaluating card orders in parallel. I decided to try the concept before writing the simulation. I made a simply command line app that can call a backend asynchronously and I made a lambda function that calls time.sleep in python and returns some information about the run (things like thread-id, ip-adress, that sort of fun stuff).

I could run 10 functions.

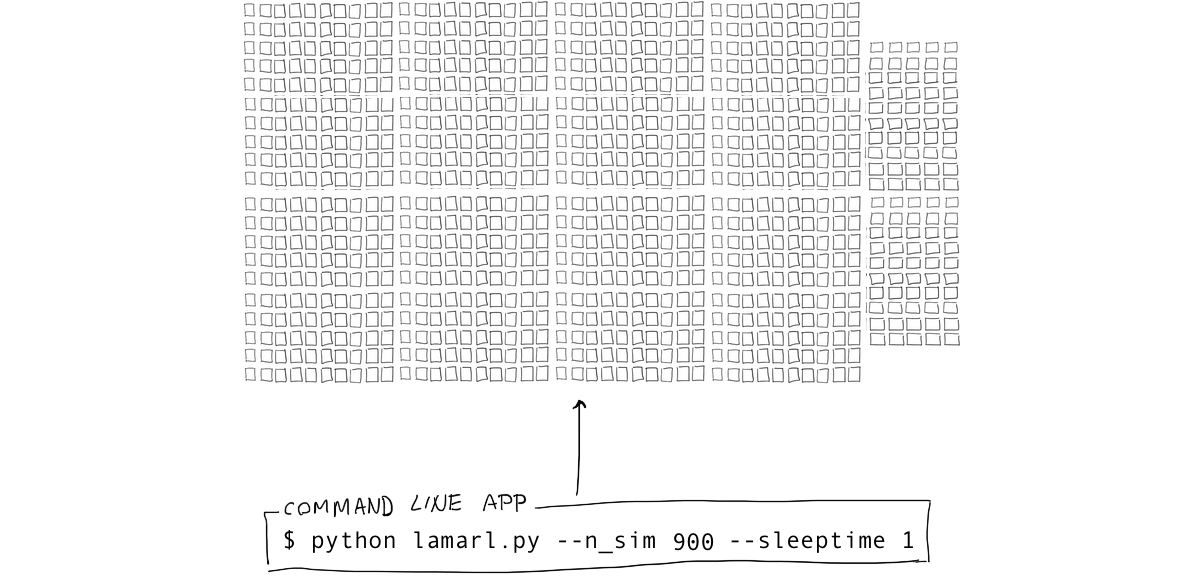

I could run 900 functions.

It worked like magic but I noticed things started breaking when you get near 1000 functions. Some functions will return with errors because Amazon protects you a bit here. You can call them if you need more resources if you need to though.

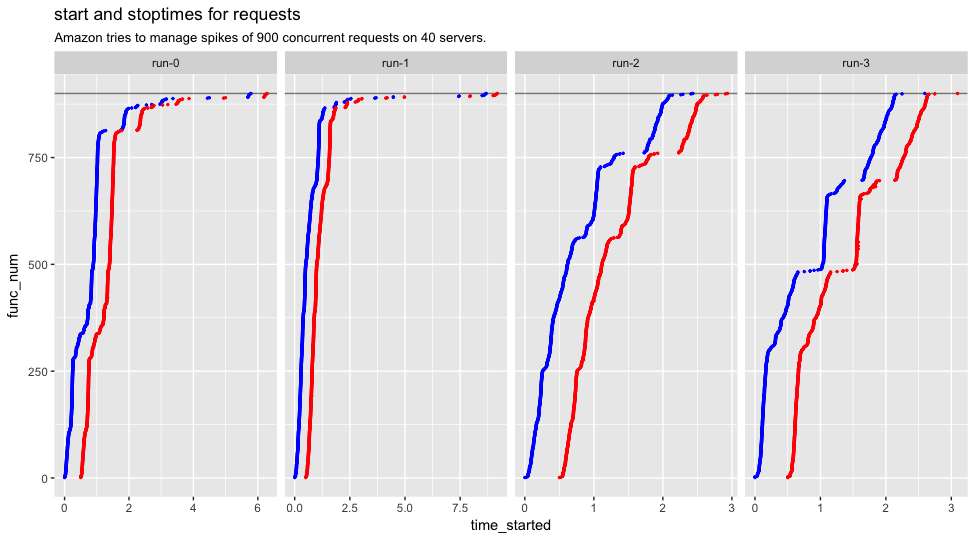

By playing around with this, I was able to make this pretty chart.

You can see different runs on the same lambda function. Each run represents a different call to my command line function that calls AWS. Note that AWS gets 900 concurrent calls instantly so it is fair if there is a bit of a cold start here and there. Also note that the x-axis is different for each run. The blue dots denote a start of a function while the red dots denote the end of one.

There is a bit of startup time before all the functions start, but all in all the overhead is not too bad considering:

- Whatever overhead is compensated by 1000x concurrency.

- Three seconds of overhead for a simulation that takes 2s in total is a lot, but it is much less of a problem when the simulation takes 30s-100s.

- Getting this running on AWS takes 5s from the command line, no need to deal with servers which is a massive timesaver.

- It costs very little. It actually takes effort, even with simulations, to get past the free tier.

Code

The reason why this is so easy to set up is becayse of a python library named chalice. With it getting a scalable simulation service is easy. In fact, this is all the code.

With code like this, chalice will handle the routing, api-gateway and provisioning by simply running chalice deploy from the command line. The nice part is that these lambda functions will scale. Without anything devops (I've never written a single line of cloudformation) I should be able to run up to about 1000 simulations in parallel if I can call this endpoint asynchronously. As luck would have it, python3.6 has great support for this so locally I've created a command line that can call amazon with aiohttp. The gist of that command line works as follows:

Results

So, this is one of those situations where I didn't really care about the destination because the path itself was going to end up being very fun. I've dabbled a bit with a genetic algorithm client side, while the simulation happens in the cloud. It seems that I've found an order of cards that wins about 60% of the time.

A few things to keep in mind with this result:

- My "bot" is playing against really stupid bots. Winning 60% of the time from somebody who just does something randomly is not a result to be proud of.

- Playing a card based on a strict order of cards is a bad strategy. You're ignoring all information that has to do with the game state.

- Something like a reinforcement approach should work a lot better but my girlfriend wouldn't allow me to use a laptop while playing the game.

What I really enjoyed was discovering that, yes, you could use AWS Lambda as a backend for simulations. It actually works in a very cost effective way. Not every simulation would fit this setup though, but I like the thought that simulations can be seen as a mere IO operation from the command line that we can run concurrently.

Giggle

I should mention that I have access to some AWS partners at the office who knew how to call the right AWS people. We wanted to go a bit overboard and we were wondering if we could get more CPU's to play with. There's something very entertaining about that phonecall with AWS when part of the conversation goes like:

"oh hey it's you. sure, here's 3000 CPU's. what will you use it for? a card game? really? well, sure, i guess."

-- AWS Representative

Conclusion

If you haven't done so already; buy sushigo, it is fantastic.